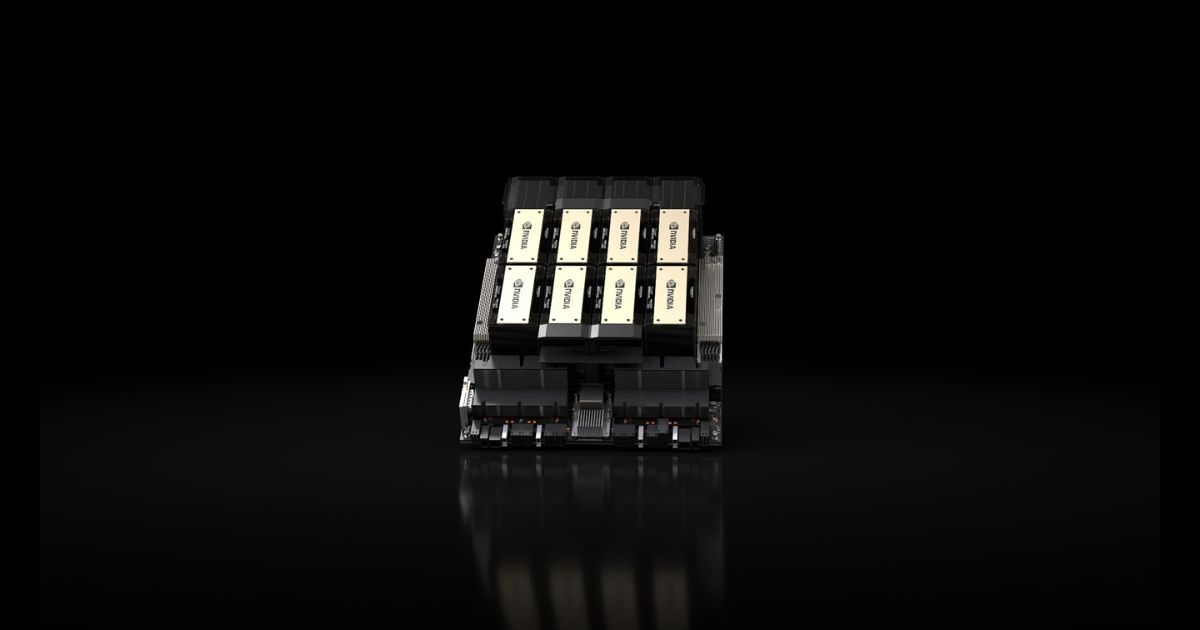

NVIDIA has announced its latest AI platform, the HGX H200, which is aimed at training and running artificial intelligence systems. The H200 succeeds the H100 and brings several performance enhancements. According to the company, the H200 will be compatible with both the hardware and software of existing H100 systems.

Google Cloud, Amazon Web Services, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances next year, along with CoreWeave, Lambda and Vultr. Below, we look at the key features and specifications of the NVIDIA HGX H200 platform.

NVIDIA HGX H200 Specifications And Features

The NVIDIA HGX H200 is built around the H200 Tensor Core GPU, which is based on the company’s Hopper architecture. The H200 is the first GPU to come with HBM3e memory, offering 141GB at 4.8 terabytes per second. This larger, faster memory is intended to accelerate generative AI and large language models (LLMs). For perspective, the H200 offers nearly double the memory capacity of the NVIDIA A100 and 2.4x its memory bandwidth.
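The comparison with the A100 can be reproduced with simple arithmetic. The sketch below assumes the reference point is the A100 80GB with roughly 2 TB/s of memory bandwidth; those A100 figures are not from NVIDIA's announcement and are rounded, so treat the output as a sanity check rather than an official number.

```python
# Rough check of the H200 vs. A100 memory comparison.
# H200 figures come from the announcement; A100 figures are ASSUMED
# (80GB variant, bandwidth rounded to ~2.0 TB/s).

H200_CAPACITY_GB = 141
H200_BANDWIDTH_TBS = 4.8

A100_CAPACITY_GB = 80       # assumed A100 variant
A100_BANDWIDTH_TBS = 2.0    # assumed, rounded

def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old

capacity_ratio = ratio(H200_CAPACITY_GB, A100_CAPACITY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, A100_BANDWIDTH_TBS)

print(f"capacity:  {capacity_ratio:.2f}x")   # capacity:  1.76x
print(f"bandwidth: {bandwidth_ratio:.2f}x")  # bandwidth: 2.40x
```

A 1.76x capacity ratio is what "nearly double" refers to, while the bandwidth ratio lands on the quoted 2.4x under the rounded A100 figure.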

“With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA, in a press release.

The company says users can expect nearly double the inference speed on Llama 2, a 70-billion-parameter LLM, compared with the H100. NVIDIA HGX H200 server boards will be available in four- and eight-way configurations. The H200 will also be offered in the NVIDIA GH200 Grace Hopper Superchip with HBM3e.

NVIDIA’s partner server makers, including ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn, can update their existing systems with the H200.

On the cloud side, Google Cloud, Amazon Web Services, Microsoft Azure, Oracle Cloud Infrastructure, CoreWeave, Lambda and Vultr will be among the first to deploy the H200 in 2024.

Thanks to NVIDIA’s NVLink and NVSwitch high-speed interconnects, the HGX H200 delivers high performance across a range of workloads, including LLM training and inference on the largest models, beyond 175 billion parameters.

An eight-way NVIDIA HGX H200 configuration provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory. The H200 will be available starting in the second quarter of 2024.
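The eight-way figures follow from the per-GPU numbers. The helpers below (`aggregate_memory_gb`, `weight_footprint_gb`, `min_gpus_for_weights`) are hypothetical and purely illustrative: they use decimal units (1 TB = 1,000 GB) and count model weights only, ignoring the KV cache, activations and framework overhead that real deployments need. Under those assumptions, a 175-billion-parameter model does not fit on a single 141GB H200, which illustrates why multi-GPU interconnects come into play for the largest models.

```python
import math

GPU_MEMORY_GB = 141        # per H200, from the announcement
FP8_PFLOPS_8WAY = 32       # "over 32 petaflops" for eight GPUs, so a lower bound

def aggregate_memory_gb(num_gpus: int) -> int:
    """Total HBM3e across an HGX board with `num_gpus` H200 GPUs."""
    return num_gpus * GPU_MEMORY_GB

def weight_footprint_gb(params_billions: float, bytes_per_param: float) -> float:
    """Memory for model weights only (1e9 params x bytes / 1e9 bytes per GB)."""
    return params_billions * bytes_per_param

def min_gpus_for_weights(params_billions: float, bytes_per_param: float) -> int:
    """Smallest GPU count whose combined memory can hold the weights alone.

    ASSUMPTION: weights only; real inference needs extra room for the KV cache.
    """
    footprint = weight_footprint_gb(params_billions, bytes_per_param)
    return math.ceil(footprint / GPU_MEMORY_GB)

print(aggregate_memory_gb(8))          # 1128 (GB), roughly 1.1 TB
print(aggregate_memory_gb(4))          # 564 (GB) for a four-way board
print(FP8_PFLOPS_8WAY / 8)             # 4.0 (PFLOPS FP8 per GPU, at least)
print(min_gpus_for_weights(175, 1.0))  # 2 GPUs at FP8 (1 byte per parameter)
print(min_gpus_for_weights(175, 2.0))  # 3 GPUs at FP16 (2 bytes per parameter)
```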

The H200 arrives more than a year after the H100, which was made official in March 2022. The H100 was the company’s first GPU based on the Hopper architecture. The H100 GPU packs 80 billion transistors and is built on TSMC’s 4N process. It comes with features such as the Transformer Engine and the NVIDIA NVLink interconnect. Like its successor, last year’s GPU was aimed at accelerating large-scale AI and HPC workloads.