Google has announced VideoPoet, a new experimental large language model (LLM) that can generate videos from text or images and edit existing videos. It can also create sound for a video, based on the input provided by the user. Scientists at Google say that VideoPoet is a first-of-its-kind model that can generate coherent motion, which is still a challenge for AI-based video generation.

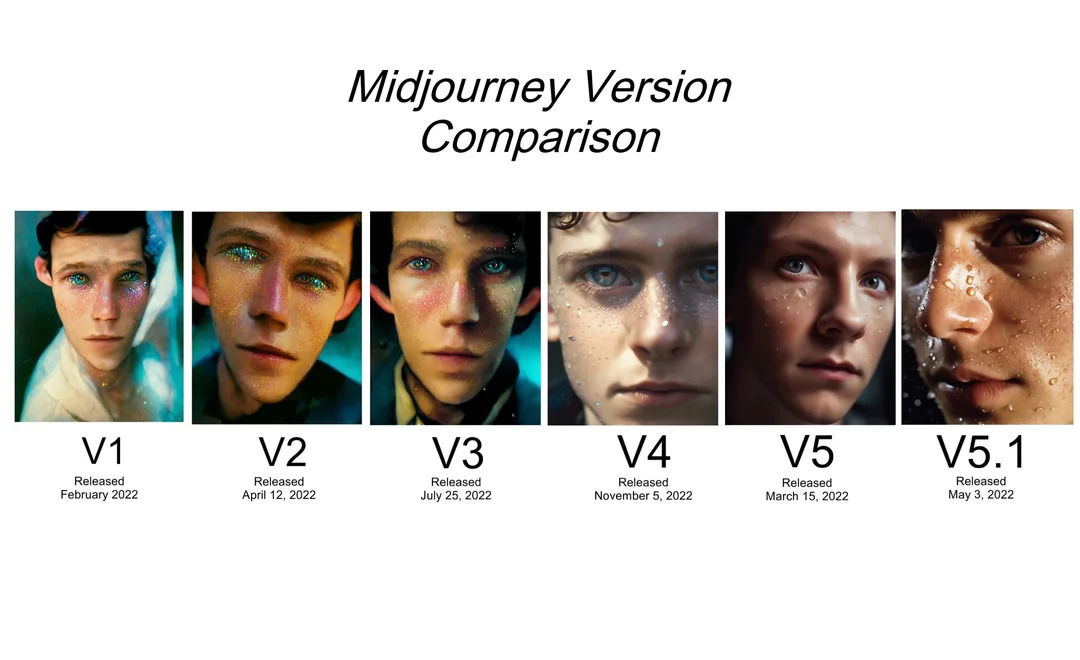

Although we have already seen the potential of AI content generators with the likes of Midjourney and DALL-E 3, AI-generated video has struggled to pair convincing motion with the level of detail these services achieve in still images. This is the problem Google’s VideoPoet aims to solve: it is a single large language model (LLM) trained on multiple modalities, including video, images, audio, and text, to create animated content.

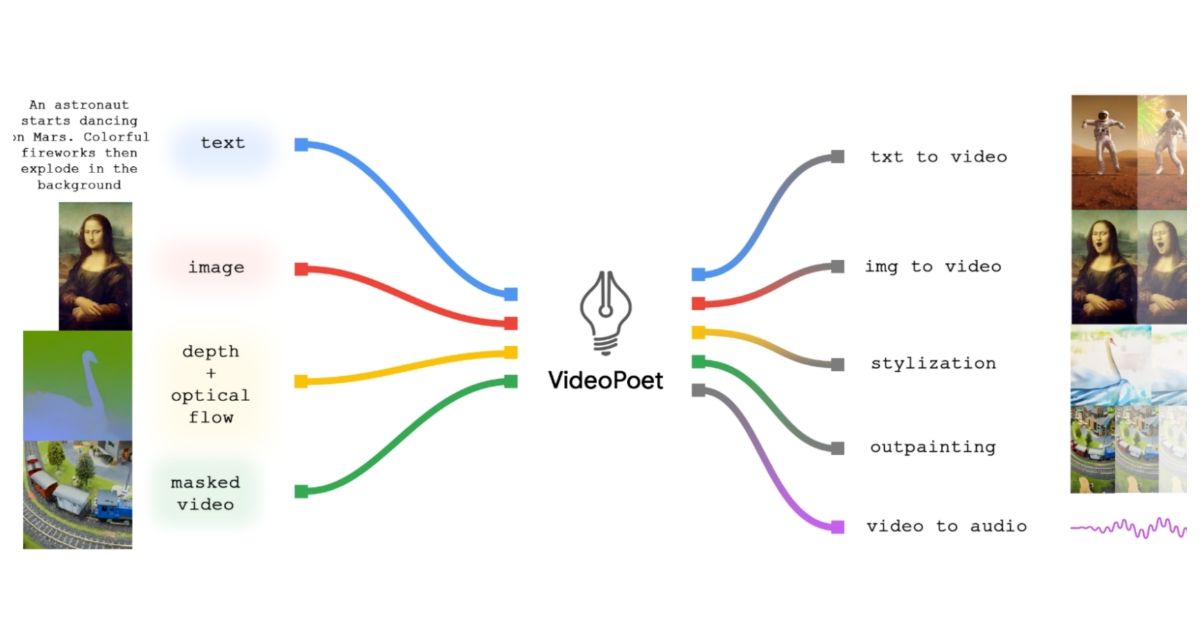

VideoPoet’s features are not limited to video generation. Google has listed the capabilities of its new AI model, which include:

- Text-to-video: Generate videos using text input

- Image-to-video: Create animated videos from images

- Video editing: Add elements to videos using AI, including moving objects

- Stylization: Apply special effects to videos, such as colour grading, clip art styling, and more

- Inpainting: Add extra details to videos, such as backgrounds, and fill in empty or masked areas

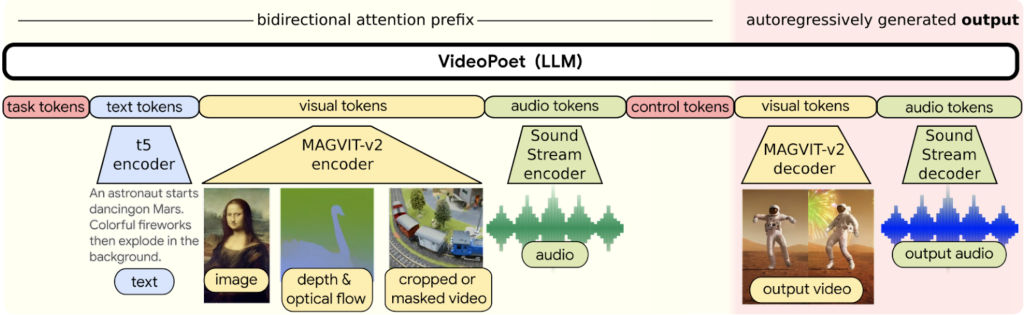

The biggest differentiator between VideoPoet and other AI content generators is the way it fills in blank spaces. While Midjourney uses a diffusion-based process that generates content from random noise, VideoPoet represents video as visual tokens and predicts them from the context of the video.

Sound is handled in a similar way: audio is converted into audio tokens using the SoundStream encoder, and VideoPoet can generate audio tokens that correspond to the visual tokens. This allows it to produce audio that matches the main subject and theme of the video.
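To illustrate how tokens from different media can sit in one sequence for a language model, here is a minimal sketch. It is not VideoPoet's actual code: the vocabulary sizes, the `OFFSETS` layout, and the function names are hypothetical and chosen only for illustration. The idea shown is simply that each modality gets its own range of IDs in a shared vocabulary, so text, visual, and audio tokens can be concatenated without colliding.

```python
# Hypothetical vocabulary sizes, for illustration only (not VideoPoet's real values).
TEXT_VOCAB = 1000
VISUAL_VOCAB = 4096
AUDIO_VOCAB = 1024

SIZES = {"text": TEXT_VOCAB, "visual": VISUAL_VOCAB, "audio": AUDIO_VOCAB}

# Each modality occupies its own contiguous ID range in one shared vocabulary.
OFFSETS = {
    "text": 0,
    "visual": TEXT_VOCAB,
    "audio": TEXT_VOCAB + VISUAL_VOCAB,
}


def to_shared_ids(modality, local_ids):
    """Shift tokenizer-local IDs into the modality's range of the shared vocabulary."""
    size = SIZES[modality]
    for i in local_ids:
        if not 0 <= i < size:
            raise ValueError(f"{modality} token {i} is outside 0..{size - 1}")
    return [OFFSETS[modality] + i for i in local_ids]


def modality_of(shared_id):
    """Recover which modality a shared ID belongs to."""
    for name, offset in OFFSETS.items():
        if offset <= shared_id < offset + SIZES[name]:
            return name
    raise ValueError(f"token {shared_id} is outside the shared vocabulary")


def build_sequence(text_ids, visual_ids, audio_ids):
    """Concatenate the three token streams into one sequence a single model could read."""
    return (
        to_shared_ids("text", text_ids)
        + to_shared_ids("visual", visual_ids)
        + to_shared_ids("audio", audio_ids)
    )


if __name__ == "__main__":
    sequence = build_sequence([1, 2], [0, 5], [3])
    print(sequence)
    print([modality_of(t) for t in sequence])
```

Because every ID maps back to exactly one modality, a single model can in principle read a prompt in one medium and continue the sequence in another.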

VideoPoet: How it Creates ‘Realistic’ Videos

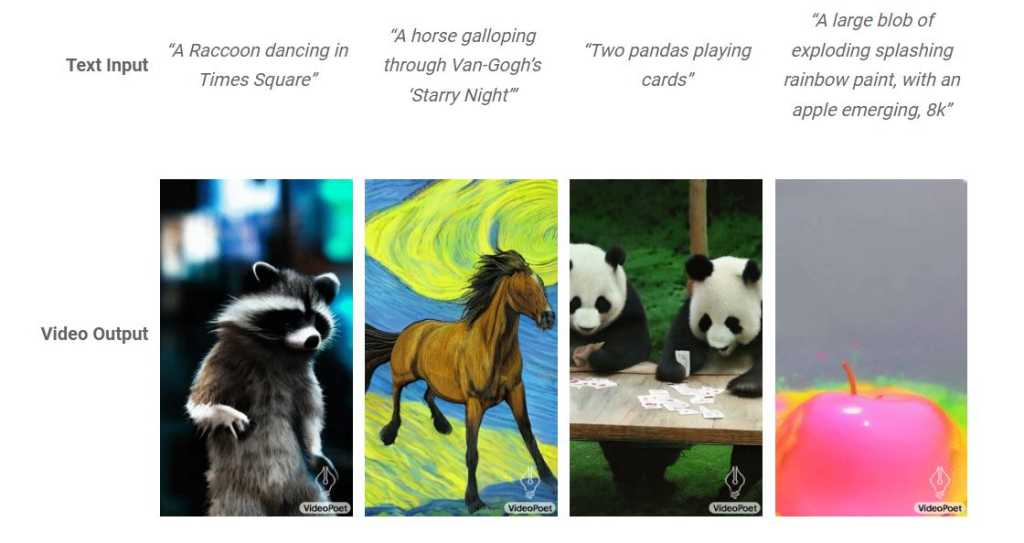

VideoPoet builds up a video by predicting visual tokens from the subjects and objects already present in it, much as ChatGPT and Google Bard compose responses by predicting a sequence of words. This helps VideoPoet create more realistic videos, compared to the blurry or less detailed animations from other services.
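The comparison with text chatbots can be made concrete with a toy next-token loop. The sketch below is only an analogy: it uses a simple bigram frequency table, not a transformer, and the function names `fit_bigram` and `generate` are hypothetical. It shows the general pattern of producing a sequence one token at a time, each choice depending on what came before.

```python
from collections import Counter, defaultdict


def fit_bigram(sequences):
    """Count how often each token follows each other token in the training data."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_new_tokens):
    """Greedily extend a sequence one token at a time from the last token."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # nothing ever followed this token in training
        # Pick the most frequent follower; break ties by the smallest token ID.
        nxt = min(followers, key=lambda t: (-followers[t], t))
        out.append(nxt)
    return out


if __name__ == "__main__":
    # Integers stand in for tokens; they could be words, visual tokens, or audio tokens.
    training = [[1, 2, 3, 4], [1, 2, 3, 5], [1, 2, 3, 4]]
    model = fit_bigram(training)
    print(generate(model, start=1, max_new_tokens=3))
```

A real model conditions on the whole preceding sequence rather than only the last token, but the generation loop has the same shape.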

The potential applications of VideoPoet are wide-ranging. Considering that it is able to fill in blank spaces, and even add extra elements to moving objects, VideoPoet could change the entertainment industry, where CGI is still a challenging task.

Google says that VideoPoet also has the potential to generate longer videos. As of now, it can create around 8-10 seconds of fully detailed video.

The company has showcased some short clips on its website with various examples of text-to-video generation. You can view all the samples on the Google Research blog.

However, VideoPoet is not yet available to the public, as Google has only announced the model so far.

Although the current clips displayed by Google may not look very appealing, the technology behind VideoPoet is fascinating in itself. We have already seen the progress made by Midjourney in the last two years, where it went from creating pixelated images to high-quality portraits. Hence, we can expect VideoPoet to gain more powerful features by the time it is released to the public.