Every year, on March 8, my phone explodes with generic “Happy Women’s Day” greetings. While I appreciate the sentiment, it somehow feels hollow. Don’t get me wrong, celebrating gender parity and women’s rights is crucial. But instead of dwelling on the usual women’s issues, I want to talk about a virtual evil that is growing alongside the rise of AI: deepfakes.

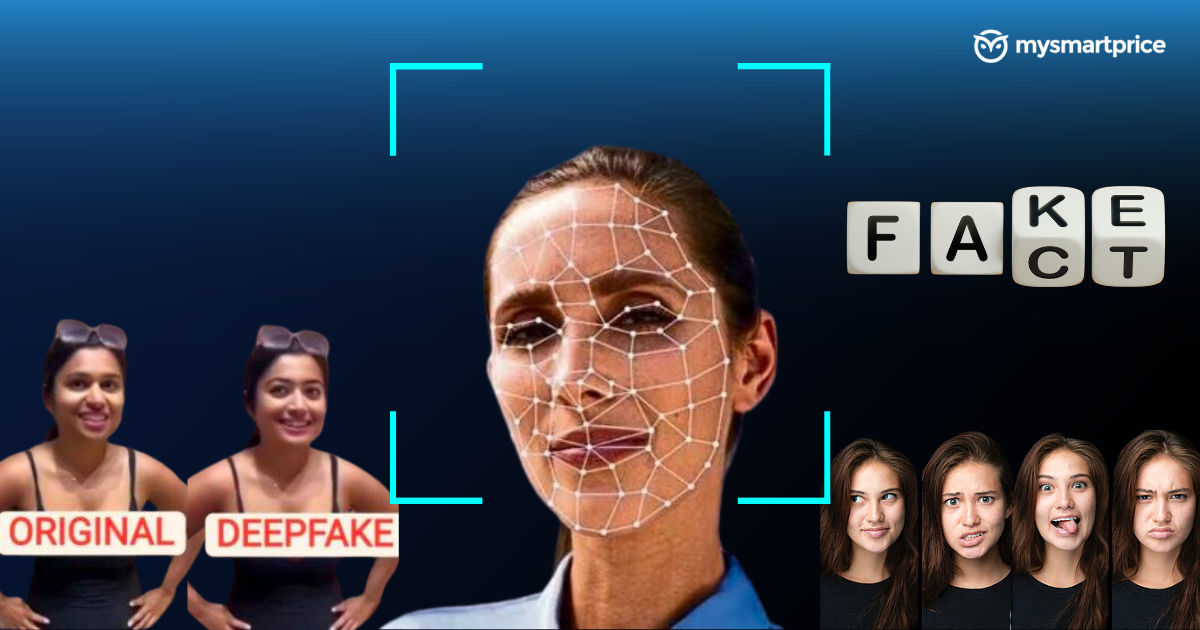

In nearly seven years of writing about technology, I’ve never felt this vulnerable on social media. These hyperrealistic manipulated videos and images are a ticking time bomb, especially for women. We saw it with the Taylor Swift incident, and even with Rashmika Mandanna; celebrities are easy targets. But the real threat lurks on platforms like Instagram, where deepfake accounts are multiplying.

The Deceptive Influencer: An Anecdote

A colleague of mine recently sent me the Instagram profile of a young woman. At first glance, I was a little confused. I wondered, “What’s so special about this influencer with 93.3k followers?” He then asked me to look closely at all of her 40 or so posts. Even then, I couldn’t tell what I was supposed to be noticing.

Then he pointed out that these were all AI-generated deepfakes. On closer inspection, I could indeed spot a few unnatural things, such as the eyes and the glow of the AI influencer’s skin.

The comments were even scarier: people genuinely thought this was a real woman. Some even went on to ask for her number. This shows how far the technology has progressed over the years and how simple it has become to create such content.

Deepfakes Are a Gendered Threat

The conventional AI-generated reels, with obviously fake facial features and voices, are usually harmless. They are also “designed” to be easily spotted and taken lightly, often as a joke. Let’s face it, deepfakes are not always sexually explicit, and they do have some use cases. But we are so engrossed in finding the “LOL” moment that we forget the kind of security issues such content raises, especially for women. And this points to deepfakes being more of a gendered problem.

A report by WIIS (Women in International Security) reveals how women are disproportionately targeted by deepfake attacks, often sexual ones (also termed sexual photoshopping). It highlights:

The harm suffered is the same as more “traditional” forms of image-based sexual violence.

This hits the nail on the head. It is safe to say that discovering a morphed video or image of herself leaves a woman feeling as harassed as “mere” eve-teasing does, if not more.

Trauma is one of the major repercussions of this. Yes, we will be advised to keep our profiles protected and our social media conduct in check, but we all know that whatever safety protocols we follow, such content will continue to be created. And we probably wouldn’t even know about it until it goes viral.

Will This Malice Ever Be Curbed?

The Taylor Swift incident enraged many and raised the question of whether laws are needed to curb the issue. Australia recently proposed a bill against non-consensual AI porn. India has also proposed regulation of deepfake and AI content. But clearly, all countries need to act more promptly and do more to tighten the rules. Companies going all in on AI need to ensure that nothing malicious goes unnoticed, and they have to train their AI models so that such occurrences are reduced, if not stopped altogether.

As I said before, the idea of this article isn’t to rant about “women’s issues” but to help you understand how important it is to recognise the chilling threat of deepfakes, especially for women. No amount of Women’s Day messages and gifting ideas (we see the latter a lot) will solve this. Ultimately, combating deepfakes requires a shift in attitude: stop treating them as mere entertainment and acknowledge the real dangers they pose!