Microsoft has unveiled Phi-3, a new family of small language models (SLMs). These AI models are aimed at developers whose use cases do not require the full capabilities of a large language model (LLM). According to Microsoft, the Phi-3 SLMs were trained on high-quality data similar to that used for the company’s top-end AI models. Here’s all you need to know.

Microsoft’s Phi-3 SLMs: Details

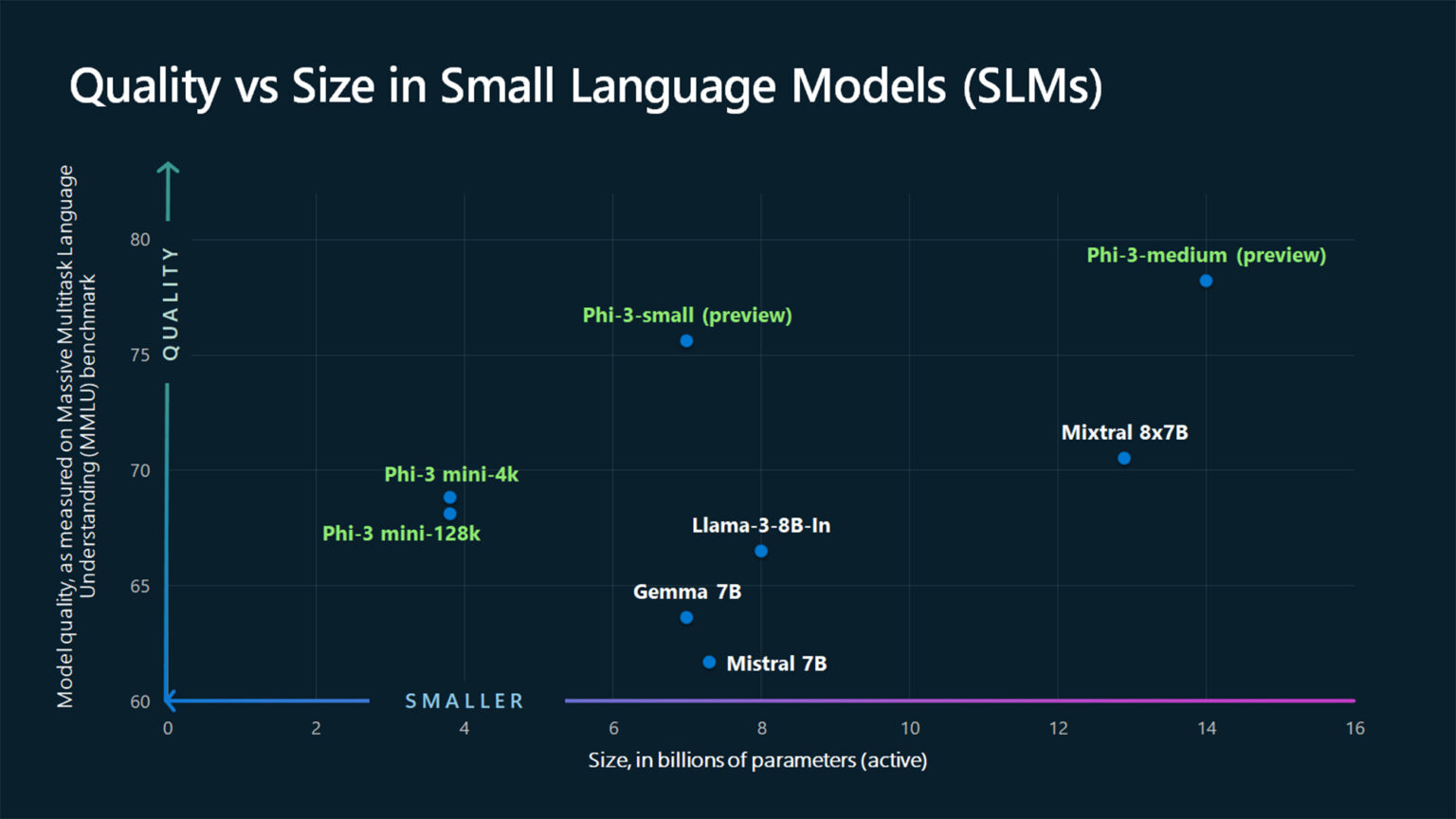

Microsoft says that its Phi-3 SLMs outperform models of similar size on language, reasoning, coding, and math benchmarks. They suit developers who need to run the same narrow set of functions repeatedly, without the overhead of an entire LLM.

Phi-3 will be available in three sizes: mini, small, and medium. Microsoft has released Phi-3-mini, a 3.8-billion-parameter model, on Azure AI Studio, Hugging Face, and Ollama. Developers can use it to power specific AI features in their software. Phi-3-mini comes in two variants, with context windows of 4K and 128K tokens.
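Because Phi-3-mini is available through Ollama, developers can try it locally with very little code. The sketch below shows one way to query it from Python using only the standard library. It assumes Ollama is installed and running on its default port (11434) and that the model has been pulled under the `phi3` tag; the helper names `build_request` and `generate` are hypothetical, not part of any official SDK.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumes the default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,   # "phi3" is assumed to be the locally pulled model tag
        "prompt": prompt,
        "stream": False,  # ask for a single JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "phi3", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # Ollama puts the generated text in "response"
```

After running `ollama pull phi3`, a call such as `generate("Summarize this paragraph in one sentence: ...")` returns the model's reply as a plain string. Setting `stream` to `False` keeps the example simple; a production app would more likely stream tokens as they arrive.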

Phi-3-mini is also optimized for Nvidia GPUs and Windows DirectML, for broad compatibility across systems. It is instruction-tuned, meaning it can follow instructions out of the box, which makes it ready to deploy in software. It is also small enough to run locally on mobile devices without an active connection to cloud servers.

Microsoft will also release Phi-3-small (7B parameters) and Phi-3-medium (14B parameters) over the next few weeks. All of these models comply with Microsoft’s Responsible AI Standard.
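Why a model this small can run on a phone comes down to simple arithmetic: the memory needed just to store a model’s weights is roughly the number of parameters times the bits per parameter, divided by eight. The sketch below applies that rule of thumb to the three Phi-3 sizes, using 3.8 billion parameters for Phi-3-mini and 7B and 14B for the other two. It ignores activations, the KV cache, and runtime overhead, and the 16-bit and 4-bit precisions are illustrative assumptions, not Microsoft’s deployment settings.

```python
# Parameter counts: 3.8B for Phi-3-mini; 7B and 14B for Phi-3-small and Phi-3-medium.
PHI3_PARAMS = {
    "Phi-3-mini": 3_800_000_000,
    "Phi-3-small": 7_000_000_000,
    "Phi-3-medium": 14_000_000_000,
}


def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the weights, in GB (1e9 bytes).

    Ignores activations, the KV cache, and runtime overhead, so real usage is higher.
    """
    total_bytes = num_params * bits_per_param // 8
    return total_bytes / 1e9


for name, params in PHI3_PARAMS.items():
    fp16 = weight_memory_gb(params, 16)  # 16-bit (half-precision) weights
    int4 = weight_memory_gb(params, 4)   # 4-bit quantized weights
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Under these assumptions, Phi-3-mini’s weights need about 7.6 GB at 16-bit precision but only about 1.9 GB at 4-bit, which is why quantization matters so much for on-device use.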

How Does Phi-3 Differ From GPT, Llama, and Gemini?

The biggest difference between Phi-3 and LLMs such as GPT (the model family behind ChatGPT), Gemini, and Llama is size: SLMs have far fewer parameters. Larger models like GPT and Llama are designed for large-scale, general-purpose applications and are better suited to tasks that demand more complex reasoning and logic.

Phi-3, by contrast, is a small language model focused on a narrower set of tasks. As a result, it can save processing time, computing resources, and energy, which reduces the cost of running it. Otherwise, the models are used in much the same way; for example, any of them can power an AI chatbot that responds to user queries.
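The savings in compute can be estimated with a common rule of thumb: generating one token takes roughly two floating-point operations (FLOPs) per model parameter. The sketch below compares Phi-3-mini (3.8 billion parameters) with a 70-billion-parameter model, using Llama 3 70B as an example. It is a back-of-the-envelope estimate that ignores attention costs on long inputs, memory bandwidth, and batching, all of which affect real-world speed and energy use.

```python
def forward_flops_per_token(num_params: float) -> float:
    """Rule-of-thumb compute for generating one token: about 2 FLOPs per parameter."""
    return 2 * num_params


PHI3_MINI = 3.8e9  # Phi-3-mini parameter count
LARGE_LLM = 70e9   # a 70B-parameter model (e.g. Llama 3 70B), for comparison

ratio = forward_flops_per_token(LARGE_LLM) / forward_flops_per_token(PHI3_MINI)
print(f"The 70B model needs ~{ratio:.0f}x more compute per generated token")
```

By this estimate, the larger model does roughly 18 times as much arithmetic for every token it produces, which translates directly into more hardware time and energy per response.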

Microsoft has said that SLMs won’t replace LLMs. Instead, these small models give developers extra flexibility to choose AI models tailored to specific applications. The company added that LLMs still deliver the highest accuracy for advanced reasoning, but a carefully trained SLM is sufficient for most practical, everyday uses.

Is Phi-3 Better Than Other Language Models?

A language model trained on better-quality data will generally provide more relevant and accurate responses. However, a typical user cannot see exactly what data a model was trained on, so the differences between AI models can be hard to notice.

The end experience also depends on how a model is deployed and on the limits imposed on it to reduce resource consumption.

For example, the free version of ChatGPT is powered by GPT-3.5, whereas the paid version runs on the more advanced GPT-4. Although the two models are hard to tell apart on specifications alone, the quality of their responses differs considerably. The GPT-4 version has fewer restrictions and was likely trained on better data, giving it a clear advantage over the GPT-3.5-powered version of the same chatbot.