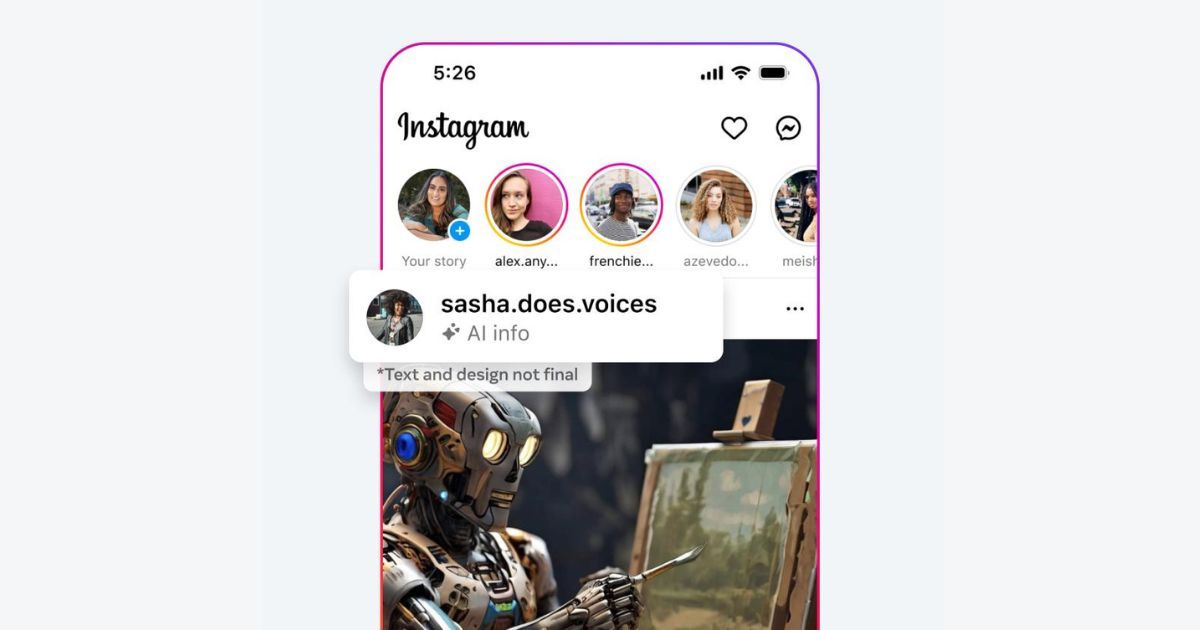

Meta has announced a new plan to label images created with the help of artificial intelligence (AI). The move responds to the growing popularity of AI image generators, which make it harder to tell whether content is human-made or AI-created.

Nick Clegg, President of Global Affairs at Meta, explained the reasoning behind the decision: “As the difference between human and synthetic content gets blurred, people want to know where the boundary lies.”

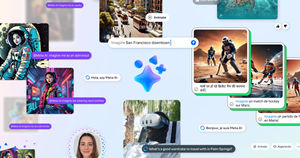

Meta already labels photorealistic images produced by its own AI image generator as “Imagined with AI.” Now the company plans to extend this practice to AI-generated images from other providers.

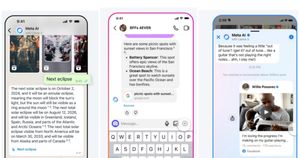

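To do this, Meta is building tools that detect the industry-standard signals, such as invisible markers and metadata, that other companies embed in their AI-generated images. As Meta’s announcement blog post puts it, “Being able to detect these signals will make it possible for us to label AI-generated images that users post to Facebook, Instagram and Threads. We’re building this capability now, and in the coming months we’ll start applying labels in all languages supported by each app.”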

Eventually, Meta plans to label images from “Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock” as well, once these companies also “implement their plans for adding metadata to images created by their tools,” according to the post.
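To give a rough idea of what checking for such metadata can look like, here is a minimal Python sketch. It is not Meta’s detector. It assumes that the generating tool wrote an XMP metadata packet containing the IPTC “digital source type” value `trainedAlgorithmicMedia`, which the IPTC photo metadata vocabulary uses for fully AI-generated media. The function name `looks_ai_labeled` is hypothetical, and the check is a plain byte search rather than a full metadata parser.

```python
from pathlib import Path
import sys

# IPTC "digital source type" value for fully AI-generated media.
# Assumption: the tool that created the image wrote this value into an XMP packet.
AI_SOURCE_TYPE = b"trainedAlgorithmicMedia"


def looks_ai_labeled(data: bytes) -> bool:
    """Return True if an embedded XMP packet declares AI-generated provenance.

    Hypothetical helper for illustration: it only finds uncompressed XMP text,
    cannot see invisible watermarks, and fails if the metadata was stripped.
    """
    start = data.find(b"<x:xmpmeta")
    if start == -1:
        return False  # no XMP packet, so there is no metadata signal at all
    end = data.find(b"</x:xmpmeta>", start)
    if end == -1:
        return False  # truncated or malformed packet
    # Only search inside the packet, not the whole file.
    return AI_SOURCE_TYPE in data[start:end]


if __name__ == "__main__":
    # Usage: python check_ai_metadata.py image1.jpg image2.png ...
    for name in sys.argv[1:]:
        print(name, looks_ai_labeled(Path(name).read_bytes()))
```

A check like this is also easy to defeat: re-saving or screenshotting an image usually drops its metadata, which is why invisible watermarks are often used alongside it.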

What About AI-Generated Video and Audio?

The challenge extends beyond images. Clegg admitted that detecting AI-generated video and audio remains difficult, mainly because invisible markers and watermarks are not yet widely applied to them. To close this gap between what AI can generate and what can be detected, Meta is changing its policy: users who post “photorealistic” AI-generated videos or “realistic-sounding” audio will need to disclose that the content is synthetic.

Furthermore, Meta reserves the right to label such content if it poses a high risk of misleading the public on important matters. Notably, failure to make this manual disclosure could lead to penalties, such as account suspensions or bans, in line with Meta’s existing Community Standards.

Why Is It Important?

Distinguishing an AI-generated image from a photograph taken by a person is becoming increasingly difficult, and it may soon become nearly impossible.

We’ve already seen examples of the potential dangers, such as the widely circulated deepfake video featuring Rashmika Mandanna and the AI-generated explicit images of Taylor Swift that went viral on X (formerly Twitter).

These incidents are likely just the tip of the iceberg, as AI capabilities continue to grow. That makes it crucial to adopt practices that help people tell AI-generated content apart from human-made content, especially on social media platforms like Instagram and Facebook.

What Are Other Tech Giants Doing?

Google recently added image generation to its AI chatbot, Bard. It also embeds a SynthID digital watermark in each generated image to identify it as AI-created.

Similarly, Samsung’s new Galaxy S24 series includes AI features such as “Generative Edit,” which can fill in and enhance image backgrounds. For transparency, Samsung adds a watermark to images edited with this feature and also embeds a tag in the image metadata stating “Modified with Generative Edit.”

This dual approach makes it harder to strip out every signal, so other software can still tell that the image has been edited with generative AI.