The last few months have seen rapid growth in the generative AI space. Plenty of tools now make it possible for anyone to generate AI content within minutes. The content is at times so realistic that even experts take a while to recognize that it is not real. That is what makes it worrying: anyone can use these generative AI tools to create content and spread misinformation with ease. In an effort to keep a check on AI content uploaded to YouTube and prevent viewers from being misled, the company has announced a new set of guidelines for creators.

Disclose AI-generated Content on YouTube or Face Penalties

The latest official blog post from YouTube reveals the company has started framing new guidelines to keep a check on AI-generated content shared in videos. The goal is not to stop creators from using generative AI tools but to make them responsible and accountable for the kind of content they choose to share with the public.

YouTube says it will soon require creators to disclose AI-generated and synthetic content used in their videos. The requirement will apply specifically to creators who post realistic-looking AI content, because viewers who are not familiar with such tools are easy prey and may end up believing whatever is shown to them. In fact, even experts may find it hard to tell that realistic AI content is not real.

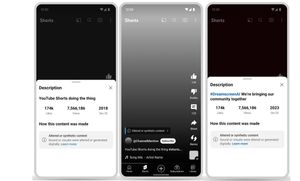

The post showed different mock-ups of how YouTube will notify users about AI-generated content. One of the screenshots showed a disclosure label in the video description panel. The label reads, “Altered or synthetic content: Sound or visuals were altered or generated digitally.” Similarly, there is a label for content posted on YouTube Shorts and Dream Screen.

YouTube further added that a disclosure alone will not be enough for certain AI-generated content. For example, a synthetically created video that shows realistic violence may still be removed by YouTube, even with a label, if its goal is to shock or disgust viewers. Creators who repeatedly fail to disclose AI-generated content may face penalties such as content removal or suspension from the YouTube Partner Program, i.e. demonetization.

The company is further working on new options for creators, viewers, and artists to help them request the removal of content that simulates their identity. Once the request is submitted, the team will look at different factors to decide whether the content should be taken down.

YouTube said the effort is in its early stages and that it will continue to evolve its approach over time. The aforementioned guidelines will be implemented in the coming months and early next year. This approach from the company is understandable considering how common AI-generated and deepfake content has become in recent times. A deepfake video of actor Rashmika Mandanna went viral on social media last week, which led many influential people to talk about the threat these new technologies pose.

![[Explained] Deepfakes: Rashmika Mandanna and More - What it is and How to Stay Safe](https://assets.mspimages.in/gear/wp-content/uploads/2023/11/Untitled-design-2023-11-06T174355.825.jpg?tr=h-115,t-true/)