Instagram and Facebook are known to have adverse effects on people’s mental health and self-confidence across all age groups. For teenagers in particular, social media can be detrimental, as this age group is especially impressionable. Now, it looks like both Instagram and Facebook are working to ensure a safer environment for teen users. In their latest attempt, both platforms have announced more restrictive content controls for teen users.

Instagram and Facebook to Impose Stricter Content Controls for Teen Accounts

Meta has announced it will now put teen user accounts into the most restrictive content control settings by default. Both Instagram and Facebook will hide harmful and age-inappropriate content from teen accounts.

Meta says it already hides such content in the Reels and Explore sections of the apps. The restrictions will now apply to content and posts seen in Feed and Stories as well. This means that even if an account a teen user follows shares an age-inappropriate post, the teen user will not be shown that particular post or story. The change will be implemented on both Facebook and Instagram in the coming weeks.

These settings are known as “Sensitive Content Control” on Instagram and “Reduce” on Facebook. The most restrictive setting was previously optional for teen users and had to be turned on manually. It is likely that many users don’t even know such settings exist. But Meta will now make it the default setting for teen accounts on both platforms.

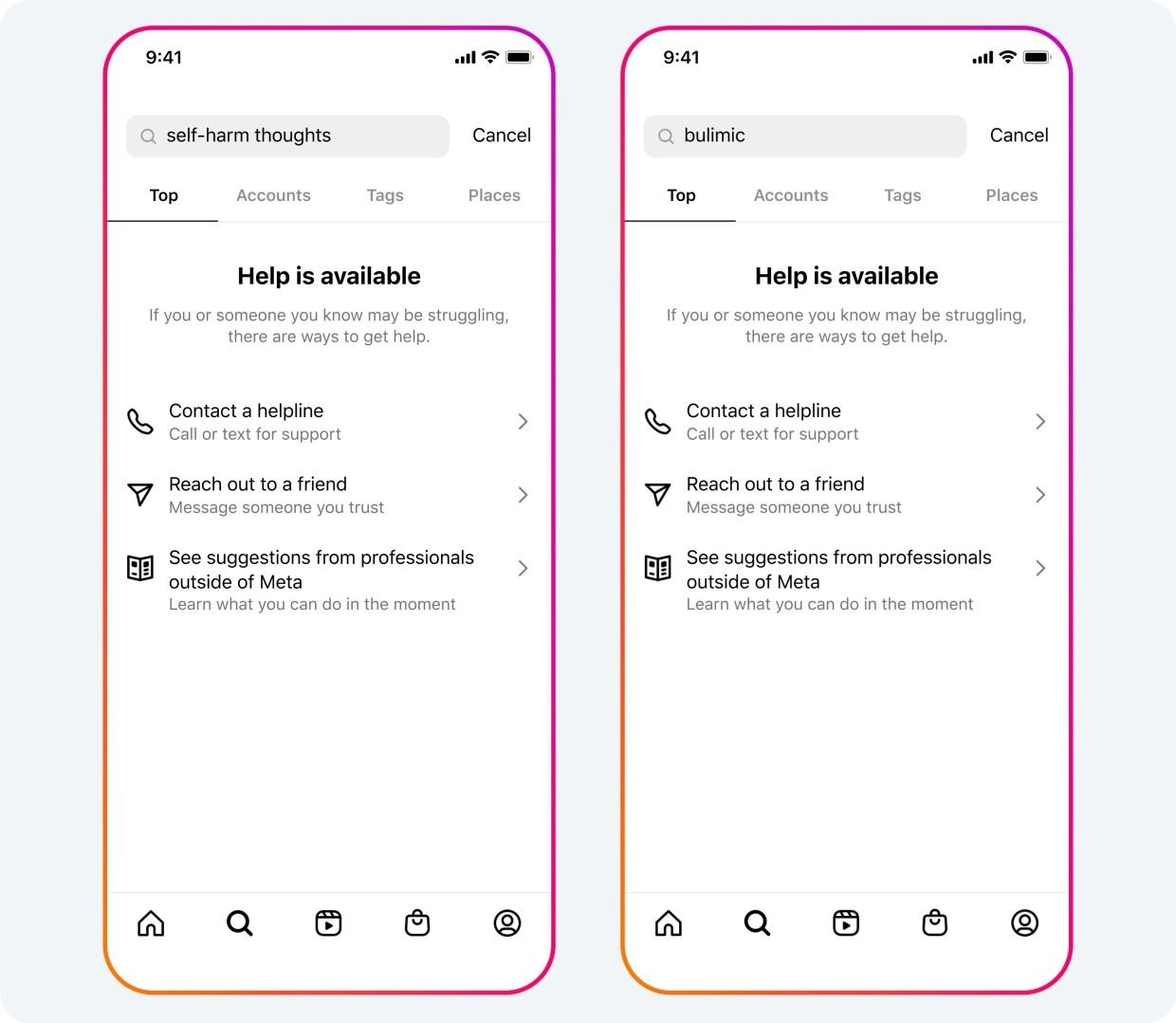

Meanwhile, Instagram is further strengthening its protection against search results related to suicide, self-harm, and eating disorders. It will now cover more search terms, hiding the related results from teens who search on such topics. Instead, the app will show expert help resources so that teen users can get timely help.

These changes come as Meta faces increased scrutiny over how its social media platforms impact teen users. Meta is already facing lawsuits from over 40 US states. The company is accused of intentionally designing features to increase the time teen users spend on its apps.

A WSJ report from 2021 claimed Facebook was aware of how toxic Instagram was for teenage girls, but chose not to do much to correct course. The report cited internal Facebook studies that revealed the impact of Instagram usage on young female users. Facebook defended itself against the report and accused the publication of ‘cherry-picking’ facts.