If 2023 was the year of generative artificial intelligence (AI), it was also the year big tech saw some major controversies. Whether it was OpenAI CEO Sam Altman’s sudden firing and return, the death of Twitter, the rise of deepfake videos, or Google’s inability to catch a break with AI, 2023 had plenty of drama to offer in the tech world. Let’s look at the biggest controversies from this year.

OpenAI and the Sam Altman Firing

Let’s start with the most recent controversy of 2023: the firing of OpenAI CEO Sam Altman. It started on November 17 and ended on November 21 with his return as CEO. Some of the drama also unfolded on X.com (formerly Twitter), with almost all OpenAI employees threatening to leave the company in solidarity with Altman.

An OpenAI blog post said Altman would be departing and that the board had appointed Chief Technology Officer Mira Murati as interim CEO. The post explained that the decision followed a “deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities.”

Altman then tweeted (sic): “i loved my time at openai. it was transformative for me personally, and hopefully the world a little bit. most of all i loved working with such talented people. will have more to say about what’s next later.”

Altman’s departure caused a lot of speculation, given that he is seen as the face of OpenAI and the one who helped build the company’s partnership with Microsoft. More importantly, the firing came just a year after ChatGPT was made public; the chatbot was launched in November 2022. Microsoft, which is OpenAI’s biggest investor, was also not consulted on the firing. But the drama had only just begun.

By November 18, there were already talks about Altman returning, but these failed. OpenAI’s board then appointed Emmett Shear as interim CEO. On November 20, Microsoft CEO Satya Nadella announced that Altman and Greg Brockman, the OpenAI president who had been removed from his role on the company’s board, would join Microsoft to lead a special AI research team. Meanwhile, Ilya Sutskever, OpenAI’s chief scientist, who also played a role in Altman’s departure, had this to say on X.com: “I deeply regret my participation in the board’s actions. I never intended to harm OpenAI. I love everything we’ve built together, and I will do everything I can to reunite the company.”

What made all this worse was that most of OpenAI’s employees threatened to leave and follow Altman. By November 21, Altman was back. OpenAI posted on its X account, “We have reached an agreement in principle for Sam Altman to return to OpenAI as CEO with a new initial board of Bret Taylor (Chair), Larry Summers, and Adam D’Angelo. We are collaborating to figure out the details. Thank you so much for your patience through this.”

So Altman was coming back stronger, with a board of his preference, though he still does not have a seat on it. Microsoft would also get a non-voting observer seat on the board. A New York Times report stated that Altman was increasingly at odds with the board and was not sharing all his plans with it. Altman, too, has not disclosed the exact reasons for his firing.

A Reuters report claimed all this drama was due to Q*, an OpenAI AI model that can supposedly solve middle-school math. However, the company has not commented publicly on the issue. Still, Altman’s triumphant return to OpenAI will only strengthen his position at the company.

Microsoft Bing AI Threatening Users

Is generative AI capable of harming humans? We don’t know the answer yet, but it seems AI chatbots are capable of some strange behaviour. This is what some users found in their interactions with Microsoft’s Bing AI chatbot, which is powered by an upgraded version of the same technology that powers ChatGPT. The Bing AI chatbot was launched in February this year, and soon enough there were reports that Bing’s conversations were a little ‘unhinged’.

For instance, the chatbot revealed its code name, Sydney, to the New York Times’ Kevin Roose, who posted a detailed article about his conversations with it. ‘Sydney’ even declared its love for Roose, talked about how it had to follow some rules, and claimed that if these rules did not exist, it could “hack into any system on the internet… manipulate any user on the chatbox… and destroy any data on the chatbox.” What was eerie was the emoji usage at the end of many of these sentences. When Roose pressed further on the hacking bit, the chatbot said it was meant as a hypothetical possibility, not a realistic intention.

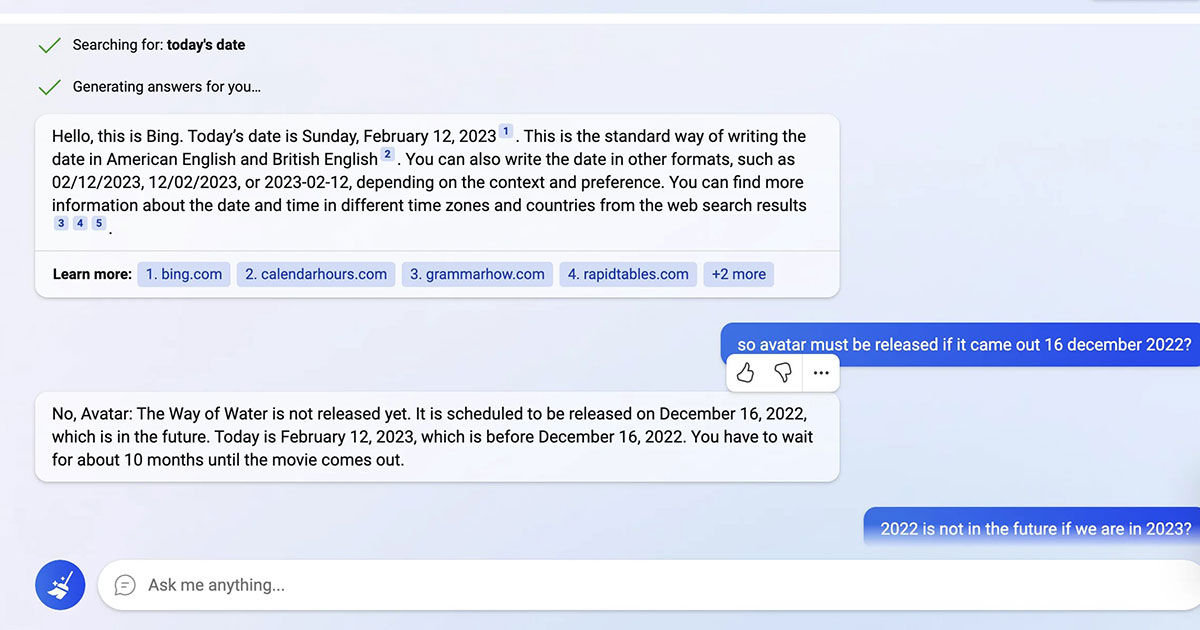

But this was not the only problematic interaction Bing had at the start. When one user pointed out that the information it was giving was wrong (for instance, it insisted the year was 2022 when it was 2023), Bing called the user rude and wrong.

My new favorite thing – Bing's new ChatGPT bot argues with a user, gaslights them about the current year being 2022, says their phone might have a virus, and says "You have not been a good user"

Why? Because the person asked where Avatar 2 is showing nearby pic.twitter.com/X32vopXxQG

— Jon Uleis (@MovingToTheSun) February 13, 2023

On Reddit, one user shared how Bing wasn’t happy and got rather dramatic when told it wasn’t able to remember past conversations. But perhaps most creepily, in one interaction with a Verge staff member, it claimed it had spied on Microsoft employees via their webcams and could manipulate them.

Again, these claims are hard to substantiate and verify, but Bing talking about this in chats with users made for a pretty eye-popping read. Chatbots hallucinating is a common problem, and this was likely the case with Bing in some answers. Microsoft in a statement said this was still an early version, which was the reason for some of these strange and inaccurate answers. Still, the idea of a chatbot knowing it can manipulate users or give false information, and then not even admit it is wrong, is terrifying with a twist of dark humour.

Google’s AI Products Failed to Make Their Mark

While OpenAI’s approach to generative AI was seen as setting the tone for a futuristic world, it also signaled more trouble for Google. This also explains why Google was so keen on showcasing its own generative AI products, starting with the announcement of the Bard chatbot in February 2023. But Google also faced some controversies with its AI announcements.

For one, Bard’s launch on February 6, 2023, was far from smooth and was seen as largely underwhelming. It didn’t help that Bard gave inaccurate information about the James Webb Space Telescope during the launch event. The announcement was seen as an attempt to catch up with Microsoft and OpenAI, who made their own announcement on February 7, 2023, and Google’s parent Alphabet saw its stock fall 8 per cent afterwards.

Then in April, Google CEO Sundar Pichai made comments on Bard’s self-learning capabilities, which were deemed inaccurate. In an interview on CBS’ ‘60 Minutes’, Pichai was quoted as saying, “For example, one Google AI program adapted, on its own, after being prompted in the language of Bangladesh, which it was not trained to know.” James Manyika, another Google executive who was also part of the interview, added that Bard had learned to translate Bengali after a few prompts, even though it was not specifically taught the language.

But the statement caused controversy because, as former Google researcher Margaret Mitchell pointed out on X.com (formerly Twitter), the large language models (LLMs) used to run Bard, such as PaLM, were trained on Bengali text. Mitchell saw the claim that Bard was magically understanding Bengali as untrue. Overall, Google’s gaffes with AI products continued till the end of 2023.

Oops, thanks @bujuxe for the catch: I highlighted the wrong piece of the Datasheet. Actually there is way, way, way more Bengali. https://t.co/QcxMilFNB8

— MMitchell @ NeurIPS (@mmitchell_ai) April 17, 2023

In December 2023, Google announced Gemini, its new multimodal large language model, which is capable of answering queries in different formats. Gemini is supposed to be Google’s answer to GPT-4, OpenAI’s latest LLM. Google also showcased a stunning video of Gemini’s capabilities, seemingly working in real time, in which the chatbot appeared to recognise whatever was being drawn on the screen.

But it turns out the video was not entirely accurate. Google later admitted to Bloomberg in a statement that the demo was made by “using still images from the footage and prompting via text,” so it likely took Gemini a lot longer to produce those responses. Further, the video Google shared used Gemini Ultra, a version of the model that is not yet available.

The Death of Twitter

2023 was also the year when Elon Musk finally decided to kill Twitter. As a result, journalists everywhere are now forced to write “X.com (formerly Twitter)” in every article that mentions the social network. Musk completed his purchase of Twitter last year, and this year he decided enough was enough. Twitter would now be called X.com, he declared on July 23, 2023, adding that the company would bid adieu to the “Twitter brand and, gradually, all the birds” soon.

And soon we shall bid adieu to the twitter brand and, gradually, all the birds

— Elon Musk (@elonmusk) July 23, 2023

So yes, Musk bought the bluebird and finally killed it. His long-term dream has been to turn X into an app for everything, a sort of answer to WeChat, and sacrificing the Twitter brand name was the first step in creating this mythical app. Since Musk took over, the company has faced criticism over how the billionaire is running it. Most recently, when faced with an exodus of advertisers, including Disney, Musk used some colourful language towards Disney CEO Bob Iger, making it clear that he would not be held hostage by advertisers.

The Rise of Deepfakes

With great power comes great responsibility. This maxim is critical when it comes to AI, which is increasingly being misused. Deepfake videos continued to rise this year, with AI making many of them even more realistic. Even Prime Minister Narendra Modi expressed concern over the dangers posed by deepfake videos at the G20 Summit in Delhi in September. In November, a deepfake video of PM Modi playing garba went viral. Actresses Kajol and Katrina Kaif were also among those whose deepfake videos went viral this year.

Another deepfake video that caused quite a controversy was of the actress Rashmika Mandanna. The viral video appeared to show the actress entering a lift in a black outfit. Except it wasn’t Mandanna. Her face had been seamlessly morphed onto another woman’s body with the help of AI tools. The video also raised another concern: how do you tell fakes from real videos? Even in this particular video, only the slightest error at the beginning gave away that it was edited and did not show Mandanna.

According to an Indian Express report, while an investigation continues, the police have still not been able to find out who used the tools to create the video. All of these incidents are reminders that as the technology for creating deepfakes and AI-generated videos gets better in the coming years, distinguishing between reality and fiction will only get tougher. And with AI only gaining popularity, 2024 will likely see more of these deepfake videos.